Tesla Installs New Cameras on Validation Vehicles, Signaling Possible Hardware Update or Training Overhaul

A new fleet of Tesla engineering validation vehicles was spotted in Los Gatos, California, fitted with unusual hardware. Unlike the standard validation mules we’ve observed for years — typically equipped...

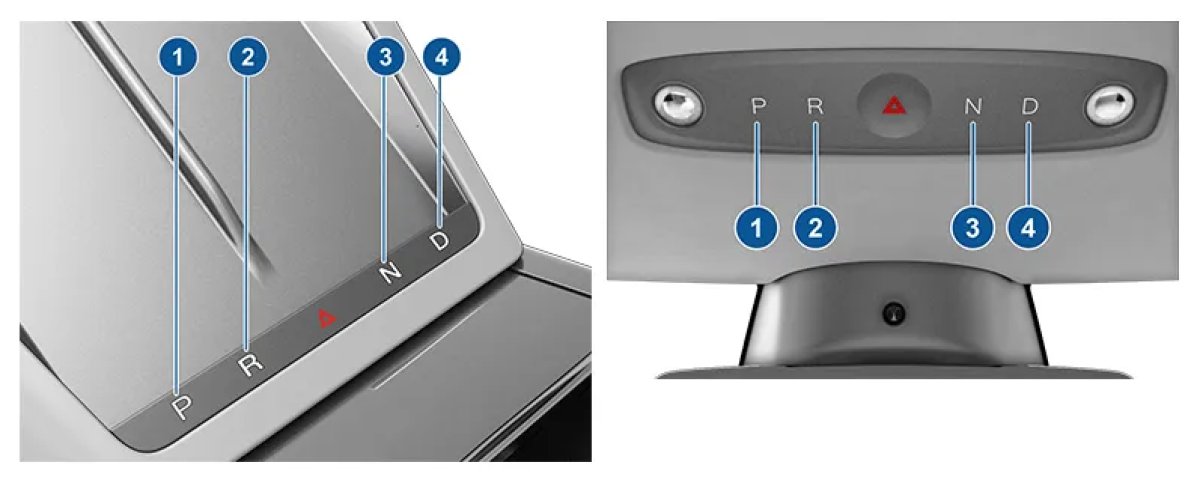

These temporary-looking brackets hold wide-angle cameras mounted low and angled outward. While Tesla regularly trials new sensors, the specific placement—targeting frontal blind spots that current camera arrays struggle to capture—strongly suggests Tesla is collecting data to address two high-priority problems: Summon/Actually Smart Summon and Banish.

Establishing ground truth

Tesla’s vision approach relies heavily on occupancy networks—models that predict the presence and motion of objects in blind spots by remembering what the vehicle saw seconds earlier. That temporal memory is critical, especially for the Cybertruck, because its front-facing cameras cannot observe certain areas around the bumper.
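To make the idea of temporal memory concrete, here is a toy sketch in Python. It is not Tesla's implementation: production occupancy networks are learned models, whereas this is a hand-written grid. The function names (`shift_for_ego_motion`, `fuse`), the grid layout, and the 0.5 "unknown" value are all assumptions made purely for illustration.

```python
from typing import List, Optional

# grid[row][col] holds an occupancy probability; row 0 is closest to the bumper.
Grid = List[List[float]]
UNKNOWN = 0.5  # assumed prior for cells that have never been observed


def shift_for_ego_motion(memory: Grid, cells_forward: int) -> Grid:
    """Slide remembered occupancy toward the vehicle as it drives forward.

    Rows that scroll in from the far edge start out as UNKNOWN.
    """
    rows, cols = len(memory), len(memory[0])
    shifted = [[UNKNOWN] * cols for _ in range(rows)]
    for r in range(rows):
        src = r + cells_forward
        if 0 <= src < rows:
            shifted[r] = list(memory[src])
    return shifted


def fuse(memory: Grid, observation: List[List[Optional[float]]]) -> Grid:
    """Overwrite cells the cameras see now; keep memory where they cannot (None)."""
    return [
        [m if o is None else o for m, o in zip(mem_row, obs_row)]
        for mem_row, obs_row in zip(memory, observation)
    ]


# Row 0 is the bumper blind zone, so the cameras always report None there.
memory = [[UNKNOWN] for _ in range(4)]
memory = fuse(memory, [[None], [0.0], [1.0], [0.0]])   # obstacle seen two cells ahead
memory = shift_for_ego_motion(memory, 2)               # vehicle creeps forward two cells
memory = fuse(memory, [[None], [0.0], [0.0], [0.0]])   # obstacle is now out of view
print(memory[0][0])  # the blind-zone cell still remembers the obstacle
```

The limitation is visible in the last step: the blind-zone cell is only as good as the earlier observation and the motion estimate, so memory on its own struggles with precise low-speed work.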

For precise, low-speed maneuvers, however, memory alone isn’t sufficient. The new corner-mounted cameras, when used in conjunction with high-fidelity roof LiDAR, appear designed to create a ground-truth dataset of the immediate area around the bumper. This combination can generate highly accurate pixel-level maps of the ground and nearby obstacles.
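One common way to turn LiDAR returns into pixel-level labels is to project each 3D point into the camera image and record its depth at the pixel it lands on. The Python sketch below shows that general technique under loud assumptions: an ideal pinhole camera (a real wide-angle lens would also need a distortion model), points already transformed into the camera frame, and made-up intrinsics. The function name `project_to_depth_labels` is hypothetical, and nothing here is confirmed to reflect Tesla's actual pipeline.

```python
from typing import Dict, Iterable, Tuple

# (x right, y down, z forward) in the camera frame, in metres (assumed convention).
Point = Tuple[float, float, float]
Pixel = Tuple[int, int]


def project_to_depth_labels(
    points: Iterable[Point],
    fx: float, fy: float,      # focal lengths in pixels
    cx: float, cy: float,      # principal point in pixels
    width: int, height: int,   # image size in pixels
) -> Dict[Pixel, float]:
    """Return {(u, v): depth}, keeping the nearest LiDAR return per pixel."""
    labels: Dict[Pixel, float] = {}
    for x, y, z in points:
        if z <= 0:
            continue  # point is behind the camera
        u = int(round(fx * x / z + cx))
        v = int(round(fy * y / z + cy))
        if 0 <= u < width and 0 <= v < height:
            # The nearest surface occludes anything behind it along the same ray.
            if (u, v) not in labels or z < labels[(u, v)]:
                labels[(u, v)] = z
    return labels


# Illustrative values only: a 100x100 image with a 100-pixel focal length.
points = [(0.0, 0.0, 2.0), (0.0, 0.0, 1.0), (0.5, 0.2, 2.0), (0.0, 0.0, -1.0)]
labels = project_to_depth_labels(points, 100.0, 100.0, 50.0, 50.0, 100, 100)
print(labels)
```

The resulting sparse depth map can serve as a supervision target: a vision model predicts depth for every pixel, and the loss is evaluated only where a LiDAR label exists.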

Tesla will likely use that dataset to train FSD neural networks to better estimate distance, shape, and volume in the forward blind zones. In short, the company is teaching the software to infer obstacles accurately in areas the production cameras cannot see, reducing the risk of clipping a curb or a fender during tight maneuvers.

The Banish bottleneck

The testing carries added urgency for two reasons: Banish is central to Tesla’s Robotaxi and FSD Unsupervised ambitions, and the Cybertruck currently lacks Actually Smart Summon. While other vehicles in the fleet have moved to FSD v14, Actually Smart Summon still runs on a legacy stack that hasn’t been migrated to Tesla’s unified end-to-end neural architecture. That legacy code depends on Autopilot components that were never ported to the Cybertruck.

Banish, the capability for a vehicle to drop off a passenger and then autonomously roam a parking lot to find a space, demands reliable performance in complex, unpredictable environments without a human driver. Promised since FSD v12.5 but not yet fully delivered, the feature leaves virtually no margin for error. Achieving it requires near-millimeter-level accuracy from vision models covering the forward blind spots.

Implications for future hardware

Although the corner mounts in these photos are clearly provisional, they raise the question of whether Tesla will add permanent low-mounted cameras in future hardware revisions or solve the issue purely through software. Rivals such as Lucid and Rivian supplement high-mounted cameras with additional corner cameras, radar, or ultrasonic sensors to address similar blind spots. Tesla previously removed ultrasonic sensors to reduce cost, complexity, and sensor conflicts, and its reliance on high-mounted cameras has proven challenging in some scenarios.

Many owners have long requested bumper-corner cameras to eliminate the front blind spot and improve visibility at obstructed intersections, where current designs often require the vehicle to creep forward before the B‑pillar cameras can see around the obstruction. Despite that demand, a retrofit or mid-cycle hardware refresh that adds these cameras to existing vehicles seems unlikely. The most plausible explanation is that Tesla is using these temporary sensors to collect targeted data and refine its software to work better with the hardware already in production.